The way we review code hasn’t changed much lately. Advances have often come in the form of yet another static code analyzer that tries to find potential issues based on predefined rules. Fortunately, there are several developments underway that will not only bring us new types of tools but also change how we view and interact with code changes. How will this impact our workflow? Will human review be entirely replaced by AI?

I have been working on the code review software MergeBoard and the VS Code Extension / GitHub App SemanticDiff and will share my thoughts on the future of code reviews in this blog post.

Will LLMs replace human reviews?

AI-assisted programming has become popular, whether through tools like GitHub Copilot or by simply copying snippets from ChatGPT. This trend will not stop at code reviews. There are already tools like pr-agent that attempt to automatically generate code review comments. So are we heading towards a future where human reviews are replaced by AI reviews?

I don’t think so. The whole discussion about AI-based or automated reviews often reduces code reviews to finding bugs or implementing small code improvements. But that’s not the only reason why code gets reviewed. By reading the changes of our team members, we keep up with the latest developments and share knowledge. It prevents anyone from becoming a single point of failure because they are the only one familiar with a piece of code. Reviewing code also builds a sense of teamwork and prevents developers from becoming overly protective of their code. All of these benefits would be lost if we were to fully automate reviews.

Even if we reduce code reviews to accepting or rejecting changes, I don’t think large language models (LLMs) in their current form could perform this task to our satisfaction. They can identify problems, but they can’t really approve a change. That would require knowledge of the requirements, the intended architecture or domain-specific constraints. While future AIs could take external documents into account, much of this information may not be available in written form, and capturing it may require changes to our workflow (e.g. summarizing a video call for the AI).

That doesn’t mean AI won’t have a place in the future of code review. AIs will play the same role as static code analyzers: they will report potential issues, but they won’t replace human reviews. On the contrary, with the rise of AI-generated code, human review may become even more important. If the author simply accepts the generated code without thinking about the details or edge cases, it will be crucial that at least the reviewer verifies the code.

Tools will learn from your code and review comments

With human reviews not going away, the main focus of AI and more classical approaches will be to support our decision making. This may not sound new as we already use many tools to find potential issues (linters, security scanners, …) or assess quality (code coverage, metrics, …), but they will work differently. While our current tools usually come with a hard-coded list of rules to identify the most common problems, the next generation of tools will be able to adapt to our code base and provide more precise feedback. Here are three examples of what automation in the future will look like.

Learning by example

Every project has its own set of norms or constraints that developers need to be aware of during reviews. A kernel may not allow floating point arithmetic in some code areas, a web service may require authentication for all endpoints in a particular folder, and so on. These are not well-known rules and they may not be very obvious to developers joining a team. In short, these are typical error patterns in your project that your static code analyzers won’t catch unless you take the time to write a plugin for them.

The rise of learning-based approaches will change this. Our code review software will be able to learn directly from our code review comments and flag more issues before a reviewer looks at the code. This will either be fully automated or we will have the ability to generate rules from fixup commits. Over time, our automated tools will become more attuned to our specific project and therefore better at providing valuable feedback.

Code consistency

There is another way tools can help us identify potential issues, even without specific rules or review comments: code consistency. If you solve something differently than all the existing code does, you either have a very good reason for doing so or you have missed something. If you use Coverity from Synopsys, you may have already seen such a feature in action:

Calling “FooBar” without checking return value (as is done elsewhere 20 out of 25 times).

I think this approach will become more widespread and generic. Verifying the consistency of newly checked-in code is a great way to provide project-specific feedback right from the start. It will also help improve code maintainability and reduce code cloning.
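To make the idea more concrete, here is a minimal sketch of such a consistency check for Python code, built on the standard `ast` module. It is not how Coverity works internally; the function names, the sample code and the 75% threshold are my own assumptions for illustration. It counts how often the result of a function is used versus silently discarded and produces a warning when a discarded call goes against the majority.

```python
import ast
from collections import Counter

def return_value_usage(source, func_name):
    """Count call sites of func_name whose result is used vs. ignored."""
    tree = ast.parse(source)
    # A call that forms a whole expression statement discards its result.
    discarded = {
        id(node.value)
        for node in ast.walk(tree)
        if isinstance(node, ast.Expr) and isinstance(node.value, ast.Call)
    }
    counts = Counter()
    for node in ast.walk(tree):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id == func_name):
            counts["ignored" if id(node) in discarded else "used"] += 1
    return counts

def consistency_warning(source, func_name, threshold=0.75):
    """Return a warning if ignoring the result contradicts the majority.

    The threshold is an arbitrary value chosen for this sketch.
    """
    counts = return_value_usage(source, func_name)
    total = counts["used"] + counts["ignored"]
    if total == 0 or counts["ignored"] == 0:
        return None
    if counts["used"] / total >= threshold:
        return (f"Calling '{func_name}' without using its return value "
                f"(it is used in {counts['used']} out of {total} call sites).")
    return None

# Hypothetical code base: three call sites use the result, one ignores it.
SAMPLE = """
ok = foo_bar(1)
if foo_bar(2):
    pass
result = foo_bar(3)
foo_bar(4)
"""

print(consistency_warning(SAMPLE, "foo_bar"))
```

A real tool would of course have to resolve methods, imports and aliases, and would compare new code against the whole repository rather than a single string.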

Code suggestions

We are used to tools that identify problems in our changes but leave the task of actually fixing them to the developer. With the help of large language models, more and more tools will be able to make suggestions that can be applied directly. No need to check out the branch, amend the commit and push a new version. This may also come in the form of a bot that can even act upon human review comments. As with code generation, such an approach will have its limitations, but the ability to directly select a suggested solution will certainly be an advantage.

What will tomorrow’s diff look like?

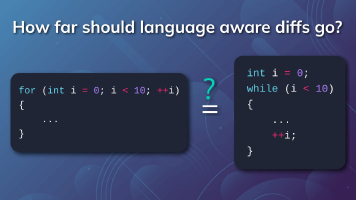

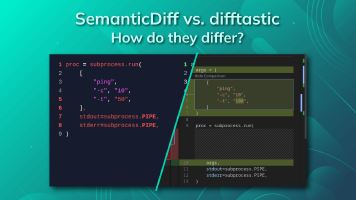

While better automation may reduce our workload somewhat, we will still spend a significant amount of time looking at code changes. Fortunately, there is some development in this area as well. To reduce the time it takes to understand code changes, our diff tools need to get smarter and stop treating code as arbitrary text. By comparing the parsed code, they will be able to give us much more precise information than just saying that a line was added or removed. This trend has already begun with tools like Difftastic, diffsitter or our own software SemanticDiff. I think this trend will not only see broader adoption, but will also bring some exciting new features:

Hiding irrelevant changes

Most teams have already come to the conclusion that arguing about code style during reviews is a waste of time. I think we will expand on that idea by declaring other changes irrelevant as well. But this time we won’t just mentally ignore them; our diff tools will provide options to hide them directly. This may start with simple cases such as converting decimal numbers to hex or replacing single quotes with double quotes. The next step will be more complex changes like upgrading your code to newer language features. The goal will be to stop focusing on changes that have no effect on the program logic. You can get a preview of what this might look like by comparing this GitHub commit with our diff and seeing how all formatting changes are ignored.
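The simple cases mentioned above can be illustrated with a few lines of Python. This is only a sketch using the standard `ast` module, not how SemanticDiff is implemented, and `same_logic` is a name I made up for this example. Because the parser already normalizes number notation, quote style and whitespace, two versions that differ only in these aspects produce the same syntax tree.

```python
import ast

def same_logic(old_source, new_source):
    """Return True if both snippets parse to the same syntax tree."""
    # ast.dump() omits line and column information by default,
    # so pure layout differences disappear in the comparison.
    return ast.dump(ast.parse(old_source)) == ast.dump(ast.parse(new_source))

old = "mask = 255\nname = 'review'\nvalues = [1,2,\n  3]\n"
new = 'mask = 0xFF\nname = "review"\nvalues = [1, 2, 3]\n'

print(same_logic(old, new))             # True: only notation and layout changed
print(same_logic(old, "mask = 254\n"))  # False: the program itself changed
```

A diff tool could use a check like this per statement to decide which changed lines it may hide. More complex cases, such as rewrites to newer language features, need dedicated equivalence rules on top of the parsed code.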

Grouping changes & guided reviews

In a perfect world, each small change would get its own commit, but in practice this is not always feasible. Often there is a mix of small refactorings and newly added code. Wouldn’t it be great if your diff tool could directly detect these refactorings and group them together? Why should you review every occurrence of a renamed variable when your diff tool has an option to hide this rename? You would probably just scroll over the occurrences anyway and possibly miss other small changes in that area.

I think we are moving towards a sort of divide-and-conquer approach to refactorings. Instead of looking at one big diff with all the changes mixed together, we either review each refactoring separately or iteratively remove them from the diff until only the remaining changes are visible. We don’t have to stop at refactorings either. We can also try to split up unrelated changes and show them separately.
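As a rough illustration of how a tool could recognize a rename and take it out of the diff, here is a small Python sketch based on the standard `ast` module. The function `detect_pure_rename` is hypothetical and deliberately simplified: it only looks at plain variable names (not function names, parameters or attributes) and assumes both versions are given as complete snippets.

```python
import ast

def detect_pure_rename(old_source, new_source):
    """Return {old_name: new_name} if the snippets differ only by
    consistent variable renames, otherwise None."""
    old_tree, new_tree = ast.parse(old_source), ast.parse(new_source)
    old_names = [n for n in ast.walk(old_tree) if isinstance(n, ast.Name)]
    new_names = [n for n in ast.walk(new_tree) if isinstance(n, ast.Name)]
    if len(old_names) != len(new_names):
        return None
    forward, backward = {}, {}
    for old, new in zip(old_names, new_names):
        # The mapping must be consistent in both directions.
        if forward.setdefault(old.id, new.id) != new.id:
            return None
        if backward.setdefault(new.id, old.id) != old.id:
            return None
    # Apply the renames to the old tree; anything still different
    # afterwards is a real change that must remain in the diff.
    for old in old_names:
        old.id = forward[old.id]
    if ast.dump(old_tree) != ast.dump(new_tree):
        return None
    return {a: b for a, b in forward.items() if a != b}

before = "total = 0\nfor x in items:\n    total = total + x\n"
renamed = "acc = 0\nfor x in items:\n    acc = acc + x\n"
changed = "acc = 0\nfor x in items:\n    acc = acc - x\n"

print(detect_pure_rename(before, renamed))  # {'total': 'acc'}
print(detect_pure_rename(before, changed))  # None
```

In the second case the rename is mixed with a logic change (`+` became `-`), which is exactly the kind of small change that is easy to miss when scrolling over dozens of renamed occurrences.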

With the changes split into logical chunks, a diff tool can also guide the review experience. One option would be to display everything in a dependency-based order, e.g. display a function definition before the places where it is used. Another option would be to do some sort of impact analysis and first show the changes that have a large impact on the program or that are security critical.
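The dependency-based order is essentially a topological sort. The following sketch uses `graphlib.TopologicalSorter` from the Python standard library (available since Python 3.9). The chunk names and their dependencies are made up for this example; a real tool would have to derive them from the parsed code.

```python
from graphlib import TopologicalSorter

# Hypothetical changed chunks, each mapped to the chunks it uses.
uses = {
    "handle_request": {"parse_header", "check_auth"},
    "check_auth": {"parse_header"},
    "parse_header": set(),
}

def review_order(uses):
    """Order chunks so that every definition is shown before its users."""
    return list(TopologicalSorter(uses).static_order())

print(review_order(uses))
# ['parse_header', 'check_auth', 'handle_request']
```

If the changes depend on each other in a cycle, `static_order()` raises `graphlib.CycleError`, so a tool would need a fallback for that case, for example showing the chunks of a cycle together. An impact-based order could reuse the same graph and simply rank chunks by how many other chunks depend on them.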

Structural merges

The next logical step will be to use the advanced change tracking not only to generate better diffs but also to resolve merge conflicts. A tool that understands that you have added a parameter has a much better chance of resolving a merge conflict than one that only knows that you have edited a line. Because these tools need to know the grammar of the programming language, they can tell right away whether a merge would be possible, or whether it would result in syntactically incorrect code.
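Here is a minimal sketch of the parameter example in Python, again using the standard `ast` module. Both branches add a parameter to the same function, so both edit the same line, which a line-based merge would typically report as a conflict. The helper names and the merge policy (keep the base order, append new parameters, drop parameters removed on either side) are assumptions for this illustration, not an established algorithm.

```python
import ast

def param_names(signature):
    """Extract the positional parameter names from a 'def ...:' line."""
    func = ast.parse(signature + "\n    pass").body[0]
    return [a.arg for a in func.args.args]

def merge_params(base, ours, theirs):
    """Three-way merge of parameter lists (assumed policy, see above)."""
    base_p, ours_p, theirs_p = map(param_names, (base, ours, theirs))
    # Keep base parameters unless one side removed them.
    merged = [p for p in base_p if p in ours_p and p in theirs_p]
    # Append parameters that either side added.
    for side in (ours_p, theirs_p):
        for p in side:
            if p not in base_p and p not in merged:
                merged.append(p)
    return merged

base = "def send(msg):"
ours = "def send(msg, retries):"
theirs = "def send(msg, timeout):"

merged = merge_params(base, ours, theirs)
merged_signature = f"def send({', '.join(merged)}):"
print(merged_signature)  # def send(msg, retries, timeout):

# Because the tool works with the grammar, it can verify the result
# immediately: ast.parse raises SyntaxError for an invalid merge.
ast.parse(merged_signature + "\n    pass")
```

This ignores default values, keyword-only parameters and the call sites that would need to be updated, but it shows why knowing that "a parameter was added" is more useful for merging than knowing that "a line was edited".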

Conclusion

I think there are a lot of interesting developments going on right now that will have a significant impact on the way we review code. We will still do manual reviews (and that is a good thing!), but we will get better support for finding issues and our diff tools will make it easier for us to understand what has changed. Do you agree with my speculations or do you have a different opinion? Let me know in the comments :-).